Protecting consumer rights in the digital society

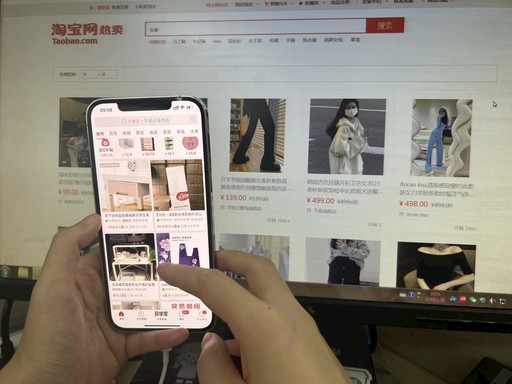

In a digital society, the provision of information is largely controlled through algorithmic bubbles, in which app service providers selectively push information to users based on their preferences or habits. Photo: Weng Rong/CSST

Consumer protection rules have a history spanning several centuries, but systematic institutional arrangements for consumer protection have existed for only half a century. Their emergence can be attributed to growing information asymmetry and unequal status between trading parties, driven by technological advances and changing transaction patterns.

When applied to such substantively unequal trading situations, traditional private law often fails to produce satisfactory outcomes, leading to ongoing disputes and high resolution costs. This created a need to intervene in transactions from the perspective of dispute prevention, which gave rise to consumer protection laws. These laws aim to promote fairness in transactions by addressing the imbalance of information and power between trading parties through mechanisms such as allocating rights in favor of consumers.

The impact of the digital society on consumer decision-making, and the challenges it poses to consumer protection laws, are particularly pronounced. Especially noteworthy is how the digital society exacerbates information asymmetry, deepening the inherent inequality between trading parties. Traditional practices that harm consumer interests not only persist but are intensified as businesses harness AI to process large volumes of data and manipulate consumer psychology. Consequently, cognitive manipulation has replaced misleading conduct as a critical issue in consumer transactions.

Consumer protection in a digital society

While digitization offers certain conveniences in the exchange of information for transactions, it often exacerbates information asymmetry. This asymmetry arises primarily not from the expansion of online transactions, but from the use of big data and AI before and during transactions in ways that can affect transaction fairness.

For instance, overconfident generative AI models can generate and spread false information, directly harming consumers. In addition, automated decision-making based on big data and AI can create information black holes that intensify existing information asymmetry. As automated decision-making proliferates, information asymmetry caused by such algorithmic black holes has become increasingly common. It calls for effective legal responses, but devising them is challenging.

First, consumers often struggle to discern the adverse consequences of algorithm design, which makes it difficult for them to seek individual recourse. Second, traditional remedies for information asymmetry may not be easily applicable. Mandatory information disclosure by businesses faces various implementation obstacles, as disclosing algorithms can harm a company's operations and expose it to misuse by competitors. Third, the complexity and technical nature of the disclosed information can challenge even experts. After extended periods of operation, the intricate workings of machine learning algorithms can be difficult to explain and understand, even for their original designers. Addressing these information asymmetry problems therefore requires innovative measures.

To address the fundamental challenge posed by digitization, we must examine more closely how it erodes contractual freedom and autonomy of will. In traditional information asymmetry scenarios, disadvantaged consumers are at least aware of their information deficit: they know that they are in an information-disadvantaged position, and they know that this gap primarily concerns the object of the transaction. In digital transactions, however, traditional information asymmetry persists while new forms of asymmetry emerge. One of these can be described as an asymmetry in understanding the other party to the transaction.

Using big data and real-time technologies, operators often understand consumers better than consumers understand themselves, which places consumers at a disadvantage in negotiation. Moreover, businesses and their associated industries use algorithms to manipulate the information available to the market, shaping consumer perceptions. Although this may not appear to affect contractual freedom and autonomy of will directly, in practice it substantially undermines these principles in the following three ways.

The first way is through controlling accessible information. People are prone to confirmation bias, which can trap them in an information bubble. Regulating selective information pushes and mitigating their consequences is challenging, because the selection takes place within the app and is based on user preferences or habits. Additionally, since many apps engage in this practice, even consumers who use multiple apps can have their information acquisition manipulated. Faced with a vast amount of information, consumers also have limited capacity to seek out information actively. Thus, when app-based selective pushes combine with people's psychological tendency to seek out information selectively, consumers become highly susceptible to being trapped in information bubbles. From a formal legal perspective, the freedom and will of decision-makers are neither influenced nor restricted. However, every decision involves processing information, and when that information is manipulated, the decision-maker's freedom of contract and autonomy are inevitably compromised. Judged by outcomes, consumers' decisions may also fail to serve their best interests.

The second way is through precision marketing based on big data. Initially, the platformization of transactions brought convenience to consumers. However, as digitization deepens, precision marketing targeting consumers has emerged. With advancements in information tracking technology, data collection, and information processing capabilities, businesses now utilize data obtained through AI and other means to create highly accurate consumer profiles, resulting in increasingly personalized marketing.

In addition, to achieve precise personalized marketing, operators may collect large amounts of consumers' personal information, raising concerns over personal information protection. Personal information protection is also relevant in traditional transactions, but there it is less complex, and existing private law generally suffices. Nor were personal information protection clauses a major focus of the “1.0 version” of the Consumer Protection Law: before digitization, consumer information could not be aggregated into big data, so it held little value and operators had no motivation to collect it. In a digital society, by contrast, acquiring information often becomes an additional objective for operators, and sometimes even the main purpose of a transaction. Consumers pay for goods and services not only with money, but also with their personal information.

The third way involves leveraging psychological mechanisms, which can exist and be applied independently of digitization. While businesses in the analogue era also used psychological mechanisms to some extent in their marketing efforts, digitization has created the conditions for the extensive use of these mechanisms.

This is typically achieved by exploiting cognitive biases in transactions. Cognitive biases are systematic deviations from the judgments a rational person would make. The platformization of transactions enables businesses to present large amounts of information in their marketing, which makes it easier to exploit cognitive biases. Unlike traditional marketing, which influences consumer decisions at a rational level, marketing that exploits cognitive biases operates subconsciously. By building cognitive biases into personalized marketing based on anonymous profiles, businesses increase the likelihood of influencing potential consumers through cognitive manipulation.

Marketing draws on psychological mechanisms, including emotion computation (also known as affective computing), to influence consumer behavior. As the economy becomes digitized, cognitive biases are widely exploited to the detriment of consumer interests. Emotion computation, a direct product of AI and big data, uses algorithms to analyze basic information about a person and infer his or her emotional state. This information is then applied in various ways.

For a long time, the economic assumption that human decisions are purely rational has been problematic. Psychological research shows that human decision-making results from a combination of rational and emotional factors, with emotions playing a significant role. Therefore, the development of AI cannot ignore the realm of “emotion.” In both theoretical research and practical applications, emotion computation based on big data and AI is receiving growing attention.

In transactions, businesses use emotion computation to gauge consumers' emotional states and adjust transaction arrangements accordingly, for example by offering product recommendations tailored to a potential consumer's real-time psychological state. Such behavior may be neutral or may harm consumer interests. Existing laws are unable to address this form of cognitive manipulation.

Legislative approaches

In the “2.0 version” of the Consumer Protection Law, legislators must consider three important factors. First, while adhering to the principle of balanced legislation, they must examine and evaluate where the balance point lies. Consumer law is essentially a legal framework that favors consumers, promoting fairness in transactions by partially rebalancing the power of the two parties. This legal concept applies equally to consumer legislation in a digital society. However, “balance” is neither one-sided nor absolute. In determining the balance point, we need to consider industrial trends, economic factors, and international experience, while continuing to prioritize consumer interests. For example, Article 1 of China’s Consumer Rights Protection Law emphasizes protecting consumers’ rights and interests while also referring to the promotion of healthy economic development. This can be understood as a constraint on tilting rights too far toward consumers: the obligations of businesses are not boundless, because unlimited obligations would be incompatible with healthy economic development.

Second, in assessing contract freedom and autonomy of will, we need to move from formal judgment to substantive judgment. Future consumer legislation should take a more comprehensive view of consumers' contract freedom and autonomy of will. Rather than relying solely on superficial judgments about the form of a transaction, it can consider factors such as the information environment, the extent to which information acquisition is manipulated, the prevalence of personalized marketing, and the exploitation of psychological mechanisms. Formal judgment is used primarily in traditional private law systems, while substantive judgment is more common where public intervention is involved. Where consumers' contract freedom and autonomy of will are eroded, identifying these issues and responding to them reasonably is a key task for future consumer protection legislation. Thus, the Consumer Rights Protection Law and related regulations should establish a systematic institutional framework that meets the practical demands of substantive judgment.

Third, we must focus on cognitive manipulation in the consumption process and draw on research findings from other disciplines. Consumer legislation in a digital society involves many new issues, such as big data and AI. As the scope of consumer protection law expands, its rules must therefore be continually refined. In recent years, China has enacted legislation on platform monopolies, algorithm regulation, personal information protection, and other cutting-edge issues, all of which involve consumer protection. However, businesses' cognitive manipulation of potential consumers is a relatively new issue associated with digitization and has not been addressed in the consumer protection laws of most countries. The issue spans several fields, including big data, AI, psychology, and marketing. Legislators must draw on research findings from these disciplines to understand the underlying mechanisms of cognitive manipulation and develop targeted responses.

Ying Feihu is a professor of Law at Guangzhou University.

Editor: Yu Hui

Copyright©2023 CSSN All Rights Reserved